1/29/2025

Understanding the Ranking System in LLM Arena: How Models Are Evaluated

Artificial Intelligence (AI) and machine learning are transforming the way we interact with technology, especially through Large Language Models (LLMs). However, the rapid advancement of AI also brings challenges when it comes to evaluating these models effectively. That's where LLM Arena comes into play, offering an innovative ranking system that lets users assess various LLMs based on their performance during interactive competitions. Let's dive deep into understanding how LLMs are evaluated and ranked in the LLM Arena.

What is LLM Arena?

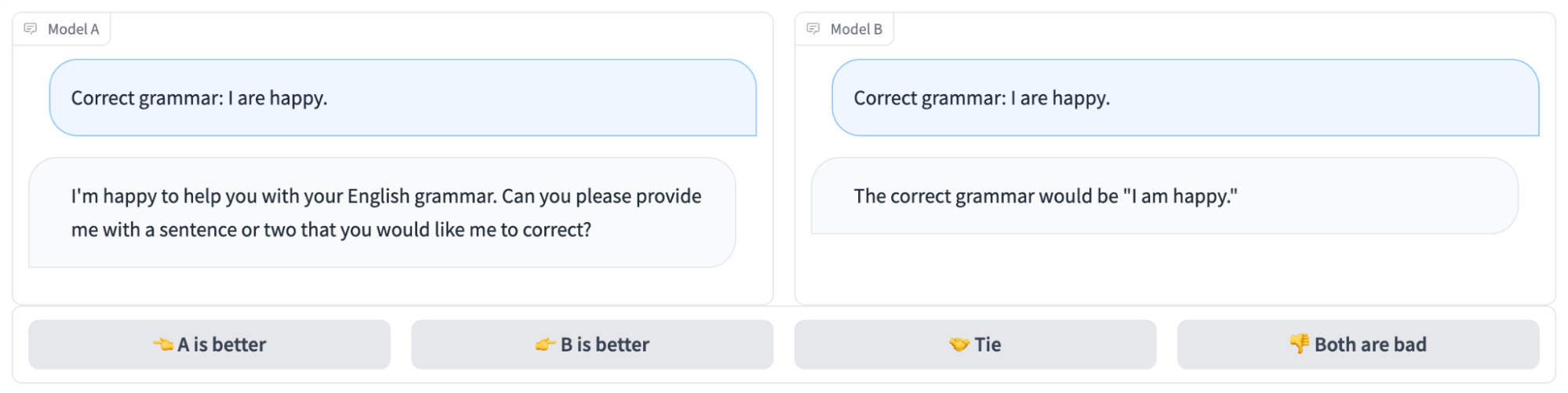

LLM Arena is a benchmarking platform designed to help the AI community understand how well different LLMs perform. Instead of relying only on static benchmarks, which models can overfit and which may not reflect real usage, LLM Arena takes a more dynamic approach: models compete head-to-head, and users vote on which response they prefer. In that sense it resembles competitive sports, where participants are ranked on results rather than on paper.

Why Use Competitions for Evaluation?

1. Dynamic Testing Environment

Traditional AI evaluation methods are often static. Fixed or offline datasets can produce biased results, for example when a model has effectively been tuned to the test set. Competitions, by contrast, assess models continuously on the prompts real users submit, which better reflects how models perform on real-world inputs.

2. Crowdsourced Feedback

By using crowdsourced feedback, LLM Arena taps into the collective knowledge of its users. Their votes reflect diverse opinions and highlight strengths & weaknesses that automated metrics might miss. The Elo rating system then turns these pairwise votes into rankings, much as it does for players in chess tournaments.

3. Encouraging Continuous Improvement

When models are regularly evaluated in a competitive setting, it motivates developers to continuously enhance their offerings. The competitive nature of LLM Arena drives innovation that benefits all users in the ecosystem.

The Elo Rating System: A Closer Look

How Elo Works

At the core of the LLM Arena ranking system is the Elo rating system. Initially developed for chess, this rating system determines the relative skill levels of players (in this case, models) based on their performance in pairwise competitions. Here's how it works:

- Initial Ratings: Each LLM starts from the same baseline Elo rating, and ratings diverge as votes accumulate. In the LMSYS blog post that introduced the leaderboard, the initial set of models had ratings ranging from around 932 to 1169.

- Pairwise Competitions: Models are placed head-to-head, and each model's rating is adjusted based on user votes. If a lower-rated model beats a higher-rated one, it gains more points than a higher-rated model gains for beating a lower-rated one.

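- Mathematical Update: After each battle, ratings are updated with the standard Elo formulas:

- Expected score for Player A, given ratings $R_A$ and $R_B$:

$$E_A = \frac{1}{1 + 10^{(R_B - R_A)/400}}$$

- Updated rating for Player A:

$$R_A' = R_A + K\,(S_A - E_A)$$

Here $S_A$ is A's actual score (1 for a win, 0.5 for a tie, 0 for a loss), and $K$ is a constant that controls how far a single result can move a rating. Player B is updated the same way, using $E_B = 1 - E_A$ and $S_B = 1 - S_A$.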

This approach helps keep rankings fair & reflective of how models actually perform across the wide variety of tasks users bring to the arena.
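To make the update rule concrete, here is a minimal Python sketch of the standard Elo expected-score and rating-update formulas. The function names are hypothetical. The K value of 32 is an illustrative assumption (a common chess setting) and not necessarily what LLM Arena uses. The example compares an upset with an expected win.

```python
def expected_score(rating_a: float, rating_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B under the Elo model (between 0 and 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / scale))


def update_ratings(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return (new_rating_a, new_rating_b) after one battle.

    score_a is 1.0 if A won, 0.5 for a tie, 0.0 if B won.
    k = 32 is an assumed, illustrative step size.
    """
    e_a = expected_score(rating_a, rating_b)
    e_b = 1.0 - e_a                      # E_B = 1 - E_A
    new_a = rating_a + k * (score_a - e_a)
    new_b = rating_b + k * ((1.0 - score_a) - e_b)  # S_B = 1 - S_A
    return new_a, new_b


# Upset: the lower-rated model (1000) beats the higher-rated one (1200).
upset = update_ratings(1000, 1200, score_a=1.0)
# Expected result: the higher-rated model (1200) beats the lower-rated one (1000).
expected = update_ratings(1200, 1000, score_a=1.0)

print(f"upset gain:    {upset[0] - 1000:.1f}")     # prints 24.3
print(f"expected gain: {expected[0] - 1200:.1f}")  # prints 7.7
```

With K = 32, the upset lifts the underdog by about 24.3 points, while the favorite's expected win adds only about 7.7. Both players' updates use the same K and complementary scores, so the points one model gains are exactly the points the other loses.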

Benefits of Using Elo for LLM Ranking

- Scalability: The Elo system can handle a large number of models without needing comparison data for every possible pairing.

- Incrementality: A new model can be evaluated and ranked after a relatively small number of battles, without re-running all previous comparisons.

- Unique Ordering: Each model gets a single rating, so for any two models the Elo system gives a clear answer to which one ranks higher, based on head-to-head results rather than a prescribed metric.
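The incrementality and unique-ordering properties can be illustrated with a small sketch that processes votes one at a time and sorts models by rating. Everything here is hypothetical: the model names, the toy vote log, the starting rating of 1000, and K = 32 are assumptions for illustration, not LLM Arena's actual data or settings.

```python
from collections import defaultdict

INITIAL_RATING = 1000.0  # assumed baseline rating for any unseen model
K = 32.0                 # assumed update step size


def expected_score(r_a: float, r_b: float) -> float:
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def apply_battle(ratings, model_a: str, model_b: str, winner: str) -> None:
    """Update ratings in place for one vote; winner is 'a', 'b', or 'tie'."""
    score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
    delta = K * (score_a - expected_score(ratings[model_a], ratings[model_b]))
    ratings[model_a] += delta
    ratings[model_b] -= delta  # zero-sum: B loses what A gains


def leaderboard(ratings):
    """Sort models by rating, highest first: one unambiguous order."""
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


ratings = defaultdict(lambda: INITIAL_RATING)

# Toy vote log with hypothetical model names.
votes = [
    ("model-x", "model-y", "a"),
    ("model-y", "model-z", "tie"),
    ("model-x", "model-z", "a"),
]
for a, b, w in votes:
    apply_battle(ratings, a, b, w)

# A new model joins later. Only its own battles are processed;
# earlier votes are not replayed.
apply_battle(ratings, "model-new", "model-x", "b")
apply_battle(ratings, "model-new", "model-y", "a")

for rank, (name, rating) in enumerate(leaderboard(ratings), start=1):
    print(f"{rank}. {name}: {rating:.1f}")
```

In this toy run, `model-new` gets a place on the leaderboard after just two battles. Because each update is zero-sum, the ratings still add up to four times the baseline.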

Factors That Influence Rankings

1. User Engagement

In LLM Arena, user engagement significantly impacts rankings. The more users test and vote on specific models, the more data is collected for the Elo system to refine the rankings. This engagement is crucial for maintaining a lively ecosystem.
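To see why more votes help, here is a small simulation sketch. It assumes a hypothetical pair of models where voters prefer model A 75% of the time, and it uses an assumed K of 4 and a fixed random seed so runs are repeatable. Under the Elo model, that preference rate corresponds to a rating gap of $400 \log_{10}(p / (1 - p))$. The sketch tracks how close the simulated gap gets to that value as the number of votes grows.

```python
import math
import random


def expected_score(r_a: float, r_b: float) -> float:
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def simulate(num_votes: int, p_a_wins: float, k: float = 4.0, seed: int = 0) -> float:
    """Simulate num_votes battles between A and B; return the gap R_A - R_B.

    p_a_wins is a hypothetical 'true' probability that voters prefer A.
    """
    rng = random.Random(seed)  # fixed seed for repeatable runs
    r_a = r_b = 1000.0
    for _ in range(num_votes):
        score_a = 1.0 if rng.random() < p_a_wins else 0.0
        delta = k * (score_a - expected_score(r_a, r_b))
        r_a += delta
        r_b -= delta
    return r_a - r_b


p = 0.75  # hypothetical preference rate for model A
target_gap = 400 * math.log10(p / (1 - p))  # gap where E_A equals p (about 190.8)

for n in (10, 100, 1000, 5000):
    print(f"{n:>5} votes: gap = {simulate(n, p):6.1f}  (target {target_gap:.1f})")
```

With only a handful of votes, the gap stays far below the target because each vote moves ratings by just a few points. With thousands of votes, it settles near the target. Since K is fixed, the estimate keeps jittering around that value rather than landing on it exactly.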

2. Prompts and Responses

The nature of the prompts presented to models plays a pivotal role. Models are evaluated based on how well they respond to various prompts, from simple Q&A tasks to more complex, multi-faceted queries. Models with high adaptability and comprehension tend to score better.

3. Model Type

Different types of models may excel in different categories depending on their design. For instance, a chat-focused model might perform exceptionally well on conversational prompts but be less effective on technical or coding tasks. Here are some of the models that have been ranked on the LLM Arena leaderboard:

- Vicuna - 13B

- Koala - 13B

- OASST-Pythia - 12B

- ChatGLM - 6B
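A single overall rating can hide differences like these. One possible way to surface them is to compute Elo ratings separately for each prompt category. The sketch below assumes each vote is tagged with a category. The category labels, model names, and votes are all hypothetical, and K = 32 and a baseline of 1000 are illustrative assumptions.

```python
from collections import defaultdict


def expected_score(r_a: float, r_b: float) -> float:
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def compute_ratings(votes, k: float = 32.0, initial: float = 1000.0):
    """Run Elo over (model_a, model_b, score_a) tuples and return a dict of ratings."""
    ratings = defaultdict(lambda: initial)
    for model_a, model_b, score_a in votes:
        delta = k * (score_a - expected_score(ratings[model_a], ratings[model_b]))
        ratings[model_a] += delta
        ratings[model_b] -= delta
    return dict(ratings)


def ratings_by_category(tagged_votes):
    """Group votes by their (assumed) category tag and rate each group separately."""
    by_category = defaultdict(list)
    for category, model_a, model_b, score_a in tagged_votes:
        by_category[category].append((model_a, model_b, score_a))
    return {cat: compute_ratings(v) for cat, v in by_category.items()}


# Hypothetical votes: (category, model_a, model_b, score for model_a)
tagged_votes = [
    ("chat", "chat-model", "code-model", 1.0),
    ("chat", "chat-model", "code-model", 1.0),
    ("coding", "chat-model", "code-model", 0.0),
    ("coding", "chat-model", "code-model", 0.0),
    ("coding", "chat-model", "code-model", 0.5),
]

for category, ratings in ratings_by_category(tagged_votes).items():
    best = max(ratings, key=ratings.get)
    print(f"{category}: top model = {best}")
```

Here the same two models swap places depending on the category, which a single combined rating would blur together.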

4. Updates to Models

As models undergo updates, their performance could change. Continuous evaluations in LLM Arena keep track of these adjustments, helping users identify the best-performing versions available.

Community Involvement in LLM Arena

Building a strong, sustainable AI community requires active participation from users. LLM Arena is open to everyone and encourages users to contribute models & evaluate responses. By voting on models based on their experiences, users help shape the development landscape while also gaining insights from the data shared in the leaderboards.

1. Join the Community

You can join ongoing competitions and participate in voting by visiting the Chatbot Arena. It’s about more than just rankings; it’s about building a better tomorrow in AI together.

2. Submit Your Models

If you’re a developer or researcher, consider sharing your models in the arena. Models can be submitted by following the platform's guidelines, which help ensure they undergo fair testing and ranking and give the community a broader set of models to evaluate.

Arsturn: Elevate Your Chatbot Experience

Speaking of models and AI, if you're keen on diving into that world, consider using Arsturn. With Arsturn, you can INSTANTLY create CUSTOM ChatGPT chatbots tailored to your needs. Whether it's for customer engagement or enhancing your brand's social media presence, Arsturn allows you to connect with your audience effectively.

Benefits of Using Arsturn Include:

- No Code Required: Design & customize your chatbot effortlessly without needing any coding skills.

- Full Customization: Integrate your UNIQUE brand identity into the chatbot experience.

- Instant Engagement: Provide your audience with prompt answers, increasing satisfaction & boosting retention rates.

Try It Out!

Claim your chatbot today with no need for credit card info. It’s time to enhance your digital presence and engage effectively with YOUR audience.

Conclusion

Understanding the ranking system in LLM Arena allows developers, users, and enthusiasts alike to navigate the complex landscape of AI models more smoothly. The innovative use of the Elo rating system, coupled with dynamic competitions and user engagement, has cultivated a robust framework for measuring performance. As technology continues to evolve, staying engaged with platforms like LLM Arena—and tools like Arsturn—will be crucial for anyone wanting to leverage AI effectively.

Let’s continue to shape the future of AI together—one conversation at a time!